Over dinner the other night, a friend and I were talking tech. Every year, we review what tools we’re using, what’s working, and what’s not. This time it led to a chat (no pun intended) about AI. And I learned something new!

In the early days of telecommunications, Morse code was a marvel of its time (1835ish). It revolutionized communication, enabling people to send messages across vast distances with unprecedented speed and efficiency. One fascinating aspect of Morse code that I learned about last night was the very human element embedded within it. Skilled operators could identify who was sending a message based on the sender’s unique rhythm and touch, a phenomenon known as a person’s “hand” (operators also called it a “fist”).

Each telegrapher developed a distinctive style—a cadence, pressure, or subtle nuance in their signaling—that turned the mechanical act of tapping into something deeply personal and human. This individuality meant that even in a highly mechanized system, the human fingerprint was unmistakable. It’s a compelling reminder of how technology, when wielded by humans, can become an extension of us rather than a replacement.

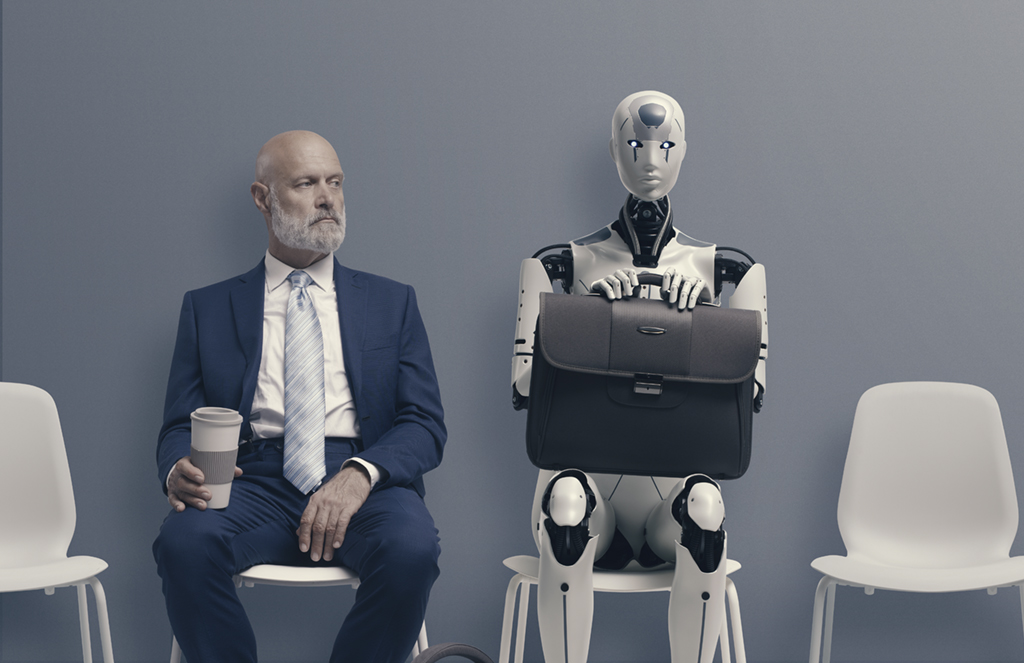

Fast forward to 2025, and we find ourselves in the era of AI-empowered employees, or what The Josh Bersin Company calls the “Superworker.” This concept describes individuals who harness the power of AI to amplify their creativity, productivity, and value. With AI as an ally, workers can scale their impact in ways once unimaginable—much like telegraphers scaling communication in the 19th century. But there’s a crucial caveat here: AI must remain a tool, not a proxy.

AI offers transformative potential. It can process massive datasets in seconds, generate insights, and automate repetitive tasks. When used strategically, it empowers humans to focus on higher-order work—solving complex problems, innovating, and creating. In this sense, AI is like the telegraph key: an instrument that extends human capability. But if we’re not careful, AI risks becoming something more agentic—an independent actor that begins to shape decisions and actions without human oversight or intervention. This is one area where caution is required.

The historical lessons of Morse code teach us that even in the most technological systems, the human element must remain central. The unique “hand” of the telegrapher was not a liability; it was a strength, a differentiator. Similarly, in today’s AI-driven workplaces, it’s the uniquely human traits—judgment, empathy, creativity, and curiosity—that must steer the ship. When we relinquish too much control to AI, we risk losing the nuance and individuality that only people can bring.

Agentic AI, meaning systems that act independently of human input, could undermine the very essence of human-centred work. Decisions made in a black box of algorithms, devoid of context or ethical grounding, can lead to unintended consequences. Check out this recent news bit about Goldman Sachs on The Hustle (paragraph 3). Worse, over-reliance on AI could erode the skills and instincts that make us uniquely capable. The Superworker’s power lies not in deferring to AI but in leveraging it, using it as a force multiplier rather than a substitute. And, of course, this is not a binary good/bad scenario. There are infinite possibilities and opportunities with AI, just as there are risks and liabilities.

Those working in STEM fields must recognize the essential role of the humanities in shaping the outcomes of their innovations. The knowledge and theories of the humanities (ethics, philosophy, history, and sociology) provide critical context for applying STEM and AI outputs responsibly and equitably (Federspiel et al., 2023). Without this integration, we risk creating tools and systems that lack the human-centred perspective needed to truly benefit society, not just a select few.

For leaders, the imperative is clear: foster a culture where AI is seen as a tool to elevate human potential, not a proxy that replaces it. Invest in training that helps employees develop the skills to collaborate with AI systems effectively. Prioritize ethical considerations in AI deployment to ensure transparency, fairness, and accountability. And most importantly, encourage a mindset that celebrates the human “hand” in every outcome. For individuals, AI can be a great idea generator or draft starter, but make sure YOUR voice comes through. My friend told me that, more and more, he is getting emails from staff and clients that are clearly AI-generated and devoid of the personalities of the people sending them.

As we navigate this new era, let’s remember that the opportunity is not about replacing the human touch but amplifying it. AI, for all its capabilities, must remain a tool in our hands, not the other way around. The future of work belongs to those who understand this balance, ensuring that technology serves humanity, not the reverse.

References:

Gilby, E., Ammon, M., Leow, R., & Moore, S. (2022). Open research and the arts and humanities: Opportunities and challenges.

Federspiel, F., Mitchell, R., Asokan, A., Umana, C., & McCoy, D. (2023). Threats by artificial intelligence to human health and human existence. BMJ Global Health, 8(5), e010435.